The overall quality of BPMN (Business Process Model and Notation) systems depends directly on how effectively the software product interacts with the end user: how clear and fast its responses are, how accurately the application processes each request, and how many interactions it can handle per unit of time or within a single transaction. Splitting work into separate transactions that are handled in a simple, uniform way is called transaction processing, and it is the foundation of any transaction processing system.

Corporate customers strive to keep their systems running online around the clock, in other words, with real-time (or streaming) transaction processing: data is processed within a short time so that the result is available immediately. Examples of such online systems include bank ATMs, traffic control systems, point-of-sale (POS) terminals, microloan systems, and more.

Low performance is therefore arguably the main problem that affects end users and damages companies' reputations. Today's users of service applications expect responsive, high-performance business products with response times under one second, regardless of how the application interacts with a particular customer.

Low-code platforms must solve this problem while keeping all the advantages of efficient application development. How, and with what tools, can the performance of BPMN systems be increased? Let's find out.

It is a common misconception that Low-code or No-code platforms, with their extensive system of tools and concepts, inevitably produce applications that run slowly and cannot be optimized at levels above the actual data access and processing. The problem is certainly real, but there are ways to solve it while preserving the advantages of low-code development.

High-level optimized “recommended” components

Modern BPMN low-code platforms contain relatively rigid, prescriptive components designed and built to work together flawlessly and efficiently.

This is believed to be the universal key to solving many performance problems that most such systems face.

These components can be highly structured, feature-rich, in other words “business-ready” elements that provide basic functionality for the business user.

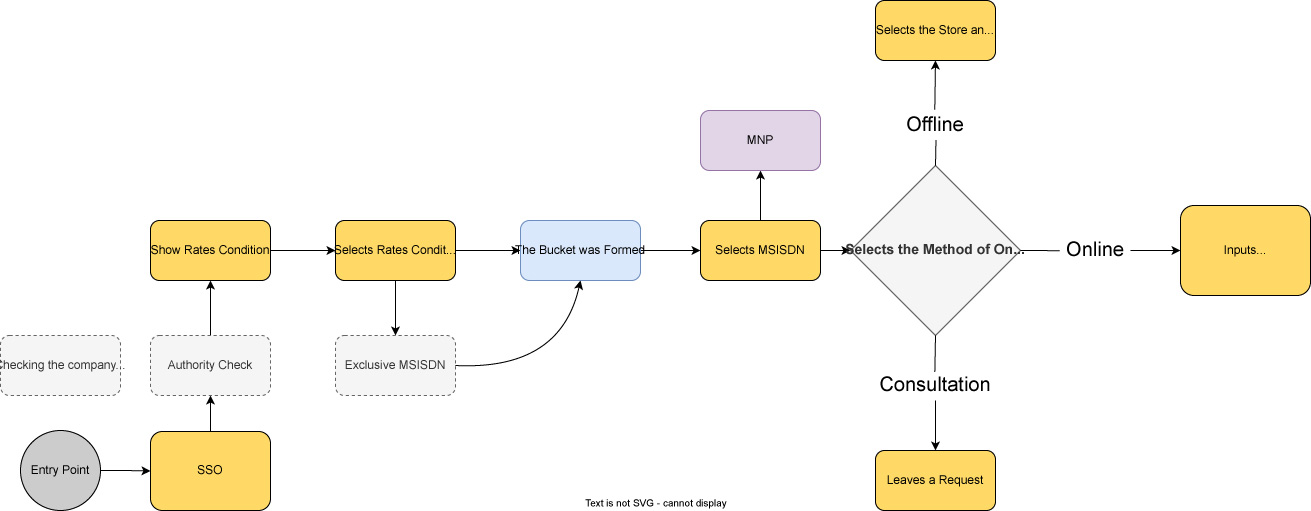

In practice, however, this universal key is undermined by multi-level, cumbersome business-logic scenarios (“puzzles”) whose possible combinations are not taken into account at the level of each individual component of the puzzle. To solve this problem, the platform should be able to redesign all the components of the puzzle automatically, producing a new, connected component optimized for execution speed. Such a component can be built so that user and data interaction is highly optimized; a typical case is a dynamic form that has to request a lot of information from a database in response to customer interactions.

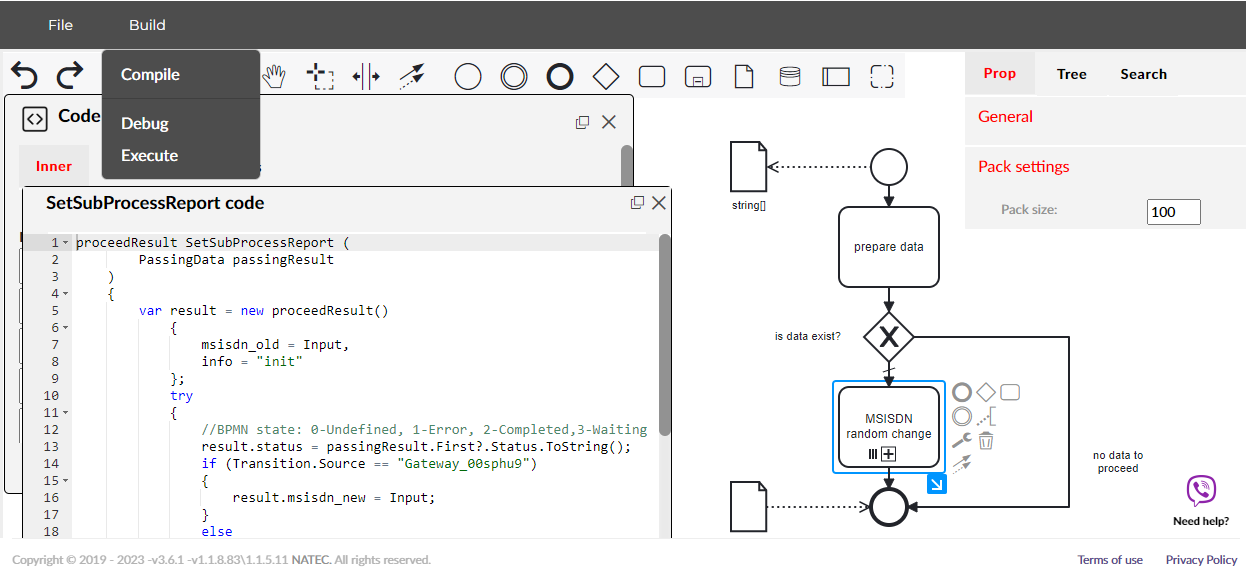

MEF.DEV experts note that the quality and performance of program execution on BPMN low-code platforms depend on having a holistically compiled and optimized version of the application for transactional execution, and on using a programming language instead of interpreted scripts.

An optimized dynamic component can collect user events, prioritize them, process them, and thus communicate with the database very efficiently. Because compile-time optimization of all components (in this example, the business-logic puzzle) is a standard function of a BPMN low-code platform, and the logic itself can be optimized, a developer building a product on low-code does not need to worry about unnecessary details and can easily create an adaptive program that works with data efficiently.
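To make the idea of an optimized dynamic component more concrete, here is a minimal sketch in Python, assuming a dynamic form whose events each need a customer record. The names `FormEvent`, `BatchingFormComponent`, and the `customers` table are hypothetical and not part of any real platform's API. Events are queued by priority, duplicate lookups are merged, and one parameterized `IN (...)` query answers the whole batch instead of one query per event.

```python
import heapq
import sqlite3
from dataclasses import dataclass, field


@dataclass(order=True)
class FormEvent:
    """Hypothetical user event from a dynamic form; a lower priority value is handled first."""
    priority: int
    seq: int  # arrival counter, breaks ties so equal priorities keep arrival order
    customer_id: int = field(compare=False)


class BatchingFormComponent:
    """Collects events, orders them by priority, and answers them with a single query."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.queue: list[FormEvent] = []
        self.seq = 0
        self.queries_issued = 0

    def collect(self, customer_id: int, priority: int) -> None:
        # Queue the event instead of hitting the database immediately.
        heapq.heappush(self.queue, FormEvent(priority, self.seq, customer_id))
        self.seq += 1

    def flush(self) -> list[tuple[int, str]]:
        # Drain the queue in priority order.
        ordered = [heapq.heappop(self.queue) for _ in range(len(self.queue))]
        # Merge duplicate lookups, keeping each id at its highest-priority position.
        ids = list(dict.fromkeys(event.customer_id for event in ordered))
        if not ids:
            return []
        # One parameterized query for the whole batch; only "?" placeholders are formatted in.
        placeholders = ",".join("?" for _ in ids)
        rows = self.conn.execute(
            f"SELECT id, name FROM customers WHERE id IN ({placeholders})", ids
        ).fetchall()
        self.queries_issued += 1
        names = dict(rows)
        return [(cid, names[cid]) for cid in ids if cid in names]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob"), (3, "Carol")])

form = BatchingFormComponent(conn)
form.collect(customer_id=3, priority=2)
form.collect(customer_id=1, priority=0)
form.collect(customer_id=3, priority=1)
print(form.flush(), form.queries_issued)  # [(1, 'Alice'), (3, 'Carol')] 1
```

Three user events cost a single database round trip here; the design choice is to pay the query cost once per batch rather than once per interaction.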

Kernel-level approach

“At the kernel level” is a programming term that means providing direct access to the desired resource. Some low-code platforms provide such direct access: while preserving the advantages of low code, they also let the developer carry out further optimization at the core level of the system where necessary, in complex core systems that may require it. A typical example is how low-code platforms handle data management.

Two main approaches to data management:

- Hide the actual database and give the user a higher-level data-model toolkit, an Object-Relational Mapper (ORM), which operates on all the source information and transforms the data automatically, without the developer noticing.

- Provide direct access to the database and let the platform handle the data schema more flexibly, in effect according to the developer's directives.

Both approaches have advantages for organizing high-load systems, and the second is considered optimal. Here's why. The ORM approach lets you abstract away from the database and greatly simplifies the source code by mapping data attributes automatically (data mapping), which significantly shortens development time, primarily during the debugging stage. Besides speeding up development, the ORM approach protects the data-access layer from attacks such as SQL injection. But applying an ORM in heavily loaded transactional environments can reduce performance, since it adds an extra layer to the system.
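The trade-off can be illustrated with Python's built-in `sqlite3` module. `MiniMapper` below is a hypothetical, deliberately tiny ORM-style layer (not a real library), and the `payment` table is an assumed schema. Both functions return the same total, but the mapper materializes every matching row as an object before summing, while direct SQL lets the database aggregate and return a single value.

```python
import sqlite3
from dataclasses import dataclass, fields


@dataclass
class Payment:
    id: int
    customer_id: int
    amount: float


class MiniMapper:
    """Hypothetical, deliberately tiny ORM-style layer that maps rows to dataclass instances."""

    def __init__(self, conn: sqlite3.Connection, model: type) -> None:
        self.conn = conn
        self.model = model
        self.table = model.__name__.lower()  # convention: class Payment -> table payment
        self.columns = [f.name for f in fields(model)]

    def filter_by(self, **criteria: object) -> list:
        unknown = set(criteria) - set(self.columns)
        if unknown:
            raise ValueError(f"unknown columns: {sorted(unknown)}")
        # Column names are checked against the model; values are always bound as parameters.
        where = " AND ".join(f"{name} = ?" for name in criteria) or "1 = 1"
        sql = f"SELECT {', '.join(self.columns)} FROM {self.table} WHERE {where}"
        rows = self.conn.execute(sql, tuple(criteria.values())).fetchall()
        return [self.model(*row) for row in rows]  # automatic data mapping


def total_via_mapper(conn: sqlite3.Connection, customer_id: int) -> float:
    # Convenient, but every matching row becomes a Python object before summing.
    payments = MiniMapper(conn, Payment).filter_by(customer_id=customer_id)
    return sum(p.amount for p in payments)


def total_via_sql(conn: sqlite3.Connection, customer_id: int) -> float:
    # Direct access: the database aggregates and returns a single value.
    (total,) = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM payment WHERE customer_id = ?",
        (customer_id,),
    ).fetchone()
    return total


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payment (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO payment (customer_id, amount) VALUES (?, ?)",
    [(1, 10.0), (1, 15.5), (1, 4.5), (2, 100.0)],
)
print(total_via_mapper(conn, 1), total_via_sql(conn, 1))  # 30.0 30.0
```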

The full-access approach lets developers build high-performance databases for complex core systems, and third-party tools can also be used for optimization. For teams that develop complex core systems, this guarantees that they will not hit a “ceiling” but will be able to build high-performance, sophisticated systems and optimize them as needed with the tools of their choice.

In practice, experienced architects use both approaches to balance performance risks, building in a choice of data-access strategy: core development based on the ORM approach, and loosely coupled code (weakly dependent pieces of code combined on the fly through the inversion of control and dependency injection patterns) for critical system elements or system integration.
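A minimal sketch of the inversion-of-control idea, assuming a hypothetical billing service: `BillingService` depends only on the `PaymentRepository` protocol, and the concrete implementation is injected from outside. The in-memory repository stands in for the convenient default path (in a real platform this would typically be ORM-based), and `SqlPaymentRepository` represents hand-tuned direct access for a critical element. All class names and the `payment` schema are illustrative assumptions.

```python
import sqlite3
from typing import Protocol


class PaymentRepository(Protocol):
    """Abstraction the business logic depends on."""

    def total_for(self, customer_id: int) -> float: ...


class InMemoryPaymentRepository:
    """Stand-in for the convenient default path (ORM-based in a real platform)."""

    def __init__(self, payments: dict[int, list[float]]) -> None:
        self.payments = payments

    def total_for(self, customer_id: int) -> float:
        return sum(self.payments.get(customer_id, []))


class SqlPaymentRepository:
    """Hand-tuned direct SQL for a performance-critical element."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def total_for(self, customer_id: int) -> float:
        (total,) = self.conn.execute(
            "SELECT COALESCE(SUM(amount), 0) FROM payment WHERE customer_id = ?",
            (customer_id,),
        ).fetchone()
        return total


class BillingService:
    """Business logic that never constructs its own data access (inversion of control)."""

    def __init__(self, repo: PaymentRepository) -> None:
        self.repo = repo  # dependency injected through the constructor

    def is_over_limit(self, customer_id: int, limit: float) -> bool:
        return self.repo.total_for(customer_id) > limit


# Composition root: the only place that decides which implementation is used.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payment (customer_id INTEGER, amount REAL)")
conn.executemany("INSERT INTO payment VALUES (?, ?)", [(1, 40.0), (1, 70.0), (2, 5.0)])

default_service = BillingService(InMemoryPaymentRepository({1: [40.0, 70.0], 2: [5.0]}))
critical_service = BillingService(SqlPaymentRepository(conn))
print(default_service.is_over_limit(1, 100.0), critical_service.is_over_limit(1, 100.0))  # True True
```

Switching a critical element to direct SQL changes only the wiring at the composition root; `BillingService` itself stays untouched.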

What is the difference between scripting and programming languages?

All scripting languages are programming languages. The theoretical difference between the two is that scripting languages do not require a compilation step and are interpreted at runtime. For example, a C program normally has to be compiled before it runs, whereas a scripting language such as JavaScript or PHP normally does not. However, this distinction does not always hold. As already noted, when it comes to performance in BPMN low-code, programming languages with compiled versions of the product still play first fiddle, rather than interpreted scripts.

And here’s why.

As a rule, compiled programs run faster than interpreted ones because they are converted into native machine code ahead of time. In addition, a compiler reads and analyzes the code only once and reports the errors it finds together, whereas an interpreter reads and analyzes each statement every time it encounters it and stops only when it hits an error. In practice, the difference between compiled and interpreted products can be somewhat blurred thanks to the greater computing power of modern hardware and more advanced coding techniques. But even a small difference between languages and standards means added latency, and every bit of time saved raises TPS (transactions per second).
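The “analyze once versus analyze every time” point can be sketched in Python with the built-in `compile()` and `eval()` functions. The rule `amount * rate + fee` is a hypothetical business rule. Note that CPython is itself an interpreter, so this only isolates the cost of re-reading source text for every transaction, not native compilation. `measure_tps` computes transactions per second as processed transactions divided by elapsed seconds; the figures depend on the machine, so no output is shown, although the precompiled variant usually reports a higher TPS.

```python
import time

# Hypothetical business rule, stored as plain source text the way a low-code designer might.
RULE_SOURCE = "amount * rate + fee"
RULE_GLOBALS = {"__builtins__": {}}  # the rule needs no builtins; this is NOT a security sandbox

# Compiler-style preparation: parse and compile the rule exactly once, up front.
COMPILED_RULE = compile(RULE_SOURCE, "<rule>", "eval")


def run_reparsing(transactions: list[dict[str, float]]) -> list[float]:
    # Interpreter-style: eval() on a string parses and compiles the rule again per transaction.
    return [eval(RULE_SOURCE, RULE_GLOBALS, tx) for tx in transactions]


def run_precompiled(transactions: list[dict[str, float]]) -> list[float]:
    # Only the ready-made code object is executed per transaction.
    return [eval(COMPILED_RULE, RULE_GLOBALS, tx) for tx in transactions]


def measure_tps(runner, transactions: list[dict[str, float]]) -> float:
    """Transactions per second = processed transactions / elapsed seconds."""
    start = time.perf_counter()
    runner(transactions)
    elapsed = time.perf_counter() - start
    return len(transactions) / elapsed if elapsed > 0 else float("inf")


txs = [{"amount": float(i), "rate": 1.5, "fee": 2.0} for i in range(10_000)]
print(f"re-parsing:  {measure_tps(run_reparsing, txs):,.0f} TPS")
print(f"precompiled: {measure_tps(run_precompiled, txs):,.0f} TPS")
```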

Another point to note is that when classifying a language as a scripting or a programming language, one should take into account the environment in which it will run. This matters because we could develop an interpreter for C and use it as a scripting language, and, equally, we could create a compiler for JavaScript and use it as a compiled (non-scripting) language. A runtime environment is the state of the target machine, which can include software libraries, environment variables, and other facilities that support the processes in the system. A living example is V8, the JavaScript engine of Google Chrome, which uses just-in-time (JIT) compilation to turn JavaScript code into machine code instead of relying on interpretation alone.

Examples of programming languages traditionally used with an explicit compilation step are C, C++, Java, and C#. Examples of scripting languages traditionally used without an explicit compilation step include JavaScript, PHP, Python, and VBScript. The table below summarizes the differences:

| | Scripting languages | Programming languages |
|---|---|---|
| Features of language use | The program consists of procedure names, identifiers, etc. that must be mapped to actual memory locations at runtime. | Programming languages are of three types: low-level, intermediate-level, and high-level. |
| Sphere of application | Commonly used to create dynamic web applications. | Used to write computer programs. |
| Dependence | Require an interpreter, because they are designed for a specific runtime environment that must interpret and execute the script. | Compiled into executable form (self-executing). |
| Performance features | The interpreter must associate the static source text of the program with the dynamic actions that occur during execution. | High-speed languages that require no additional interpretation at runtime. |
| Example languages | Bash, Ruby, Python | C++, Java, C# |
| Portability | Can be easily migrated to different operating systems and processor architectures. | Natively compiled programs depend on the processor architecture (x86/x86-64, ARM, and others). |

Consequently, low system performance can be addressed at the level of technical architecture and the correct use of programming languages, that is, of the compiler and the program's components. Elements that follow the general construction logic are selected and included in the platform based on their performance.

In a well-designed BPMN low-code platform, individual elements do not degrade the overall efficiency of the system, because performance is already built into the low-code architecture and the system components themselves. Where the complexity of the project requires it, some low-code platforms use the “at the kernel level” approach to open up possibilities for targeted optimization.

So, when it comes to the performance of BPMN low-code platforms, MEF.DEV experts advise the following: to be fast and adaptive, these platforms must be compiled for immediate self-execution rather than built to run in an interpreter environment.
